Entries in Interactive (15)

New Publications

If you haven't already done so, make sure to check out this month's issue (April 2013) of Architectural Design (AD) titled Computation Works: The Building of Algorithmic Thought. Edited by Xavier De Kestelier and Brady Peters, this issue focuses on emerging themes in computational design practices, showcasing built and soon-to-be-built projects and surveying the state of the art in computational design.

In addition to some amazing articles by Daniel Davis, David Rutten, Daniel Piker, Giulio Piacentino, Arthur van der Harten, Thomas Grabner and Ursula Frick, and many more, it also features an article that I co-authored with Jason K. Johnson titled Firefly: Interactive Prototypes for Architectural Design. Make sure to check it out, as it's definitely worth the read!

In addition, make sure you also take a look at the book Prototype! edited by Julian Adenauer and Jorg Petruschat, which was published by Form+Zweck last summer (2012). Written by leading individuals at world-renowned design labs and research centers, this book offers a unique compilation of articles centered around the topic of advanced forms of prototyping. In my article, IDE vs. IPE: Toward an Interactive Prototyping Environment, I discuss the need to shift toward a more visually oriented Interactive Prototyping Environment (IPE) which addresses the limitations found in the existing IDE paradigm and opens up creative new opportunities for artists and designers.

Firefly + Kinect

Like many people, I've been anxiously awaiting the official release of the Microsoft SDK for the Kinect. Now that it's officially out, I spent some time over the last two weeks working on a set of Kinect-related components that I hope to include in the next release of Firefly (1.007). The first component I tried to implement was the Skeleton Tracker... and I have to admit that the results are quite promising. It's surprisingly fast, and as long as you stay within the specified range of the sensor, the tracking is quite good. Using this component I put together two very quick demo videos.

There has been a big push over the last decade to develop novel 3D technology for multimedia displays (whether it's new ways for stereoscopic projection, refractive lenses, etc.). One of the most successful and inventive implementations (in my opinion) was Johnny Chung Lee's reverse engineering of the Wii sensor bar. Another recent example (and equally impressive) is this hack using the Kinect sensor and head tracking.

The video above is my first attempt to create a real-time 3D display system within Grasshopper using Firefly's new Skeleton Tracker component and some simple camera manipulation. The Skeleton Tracker component outputs a list of points (click here for further explanation). From there, I simply use the Horster Camera Control component (another 3rd party plugin for Grasshopper) to position the camera at the viewer's head and the camera target at a point in space locating the Kinect sensor. It really is that easy. Turn on some real-time shadows and you've got a real-time 3D display. It still needs some tweaking but it's pretty fun to play with.
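The camera setup described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the Grasshopper definition itself: the `head_index`, the `scale` factor, and the assumption that skeleton points are reported relative to the sensor are all hypothetical choices made for the example.

```python
from typing import NamedTuple, Sequence, Tuple

Point = Tuple[float, float, float]


class CameraPose(NamedTuple):
    location: Point  # where the virtual camera sits (the viewer's head)
    target: Point    # what the camera looks at (the sensor position)


def head_tracked_camera(skeleton: Sequence[Point],
                        head_index: int,
                        sensor_position: Point,
                        scale: float = 1.0) -> CameraPose:
    """Place the camera at the tracked head and aim it at the sensor.

    Assumes skeleton points are offsets from the sensor (hypothetical),
    scaled into model units by `scale`.
    """
    hx, hy, hz = skeleton[head_index]
    sx, sy, sz = sensor_position
    location = (sx + hx * scale, sy + hy * scale, sz + hz * scale)
    return CameraPose(location=location, target=sensor_position)
```

Calling this once per frame with the latest skeleton and feeding the result to a camera control would reproduce the effect: as the head moves, the view of the model shifts with it.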

This next demo shows how easy it is to turn gestural movements into physical actuation using an Arduino. The setup is very simple. The z-value of my right hand (basically the height of my hand) controls the brightness value (or Pulse Width Modulation - PWM) of the LED. My left hand controls the servo. When my hand is by my side, the servo goes to position 0, and if I raise my hand above my head the servo moves to position 180. So simple. Of course, this could be expanded to control all sorts of things... perhaps that is next.
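The hand-to-actuator mapping above boils down to a clamped linear remap. Here is a minimal sketch, assuming the hand height arrives as a number and that `LOW_Z` and `HIGH_Z` (hypothetical calibration values for "hand by my side" and "hand above my head") have been measured beforehand. The 0 to 255 range is the usual 8-bit PWM range on an Arduino; 0 to 180 is the servo range mentioned above.

```python
LOW_Z = 0.0   # hypothetical: hand height when the arm hangs by the side
HIGH_Z = 1.0  # hypothetical: hand height when raised above the head


def remap(value: float, src_min: float, src_max: float,
          dst_min: int, dst_max: int) -> int:
    """Linearly map value from the source range to the target range, clamped."""
    if src_max == src_min:
        raise ValueError("source range must not be empty")
    t = (value - src_min) / (src_max - src_min)
    t = max(0.0, min(1.0, t))  # keep out-of-range hands from overshooting
    return round(dst_min + t * (dst_max - dst_min))


def hand_to_pwm(z: float) -> int:
    """Right hand height -> LED brightness (8-bit PWM duty)."""
    return remap(z, LOW_Z, HIGH_Z, 0, 255)


def hand_to_servo(z: float) -> int:
    """Left hand height -> servo position in degrees."""
    return remap(z, LOW_Z, HIGH_Z, 0, 180)
```

Clamping matters here because the tracked hand can easily leave the calibrated range, and most actuators should not be sent values outside their limits.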

Firefly 1.006 New Features

I was extremely excited to announce the official release of Firefly version 1.006 earlier this week. For those who aren't familiar with Firefly, allow me to provide a short introduction. Firefly is a set of software tools dedicated to bridging the gap between Grasshopper (a free plug-in for Rhino), the Arduino micro-controller, the internet and beyond. It allows real-time data flow between the digital and physical worlds and will read/write data to/from internet feeds, remote sensors and actuators, mobile phone devices, the Kinect, and more. There are so many new components in this release (including the Arduino Code Generator, Upload to I/O Board, UDP and OSC Listeners and Transmitters, XML Search, and State Detection) that I thought it would be a good idea to put together a few videos showing some of the latest features. So without further ado...

This first video shows the potential of the new Arduino Code Generator and the Upload to I/O Board components. In my opinion, one of the greatest limitations of the previous versions of Firefly was that your Arduino board always had to be tethered to your computer via the USB cable. This was because Firefly communicates back and forth to Grasshopper through serial communication. However, sometimes you just want to use Grasshopper (and its visual programming interface) to prototype your design and then unplug it from your computer to run off external power. Now, you can!

The Arduino Code Generator attempts to convert any Grasshopper definition into Arduino compatible code (C++) on the fly. It works by detecting components that are 'upstream' from the Uno/Mega Write component. The Code Generator checks the component ID against a library of custom C++ functions which then get added to the code if there is a match. The code can be simultaneously saved as a .pde (Arduino Sketch) file to be opened in the Arduino IDE.
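To make the lookup idea concrete, here is a rough Python sketch of walking upstream from a write component and collecting library functions by component ID. It is not Firefly's implementation: the graph representation, the component names, and the contents of `library` are all hypothetical and exist only to illustrate the matching step described above.

```python
from typing import Dict, List


def collect_upstream(graph: Dict[str, List[str]], start: str) -> List[str]:
    """Return every component upstream of `start`, each listed once."""
    seen = set()
    order: List[str] = []
    stack = list(graph.get(start, []))
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        order.append(node)
        stack.extend(graph.get(node, []))  # keep walking toward the sources
    return order


def generate_functions(graph: Dict[str, List[str]],
                       component_ids: Dict[str, str],
                       start: str,
                       library: Dict[str, str]) -> List[str]:
    """Collect the C++ snippets whose component ID appears in the library."""
    snippets: List[str] = []
    for name in collect_upstream(graph, start):
        snippet = library.get(component_ids.get(name, ""))
        if snippet is not None and snippet not in snippets:
            snippets.append(snippet)
    return snippets
```

In this sketch `graph` maps each component to the components wired into its inputs, so a slider feeding a remap feeding the write component would contribute only the snippets that have a matching library entry.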

In addition, there is also a new Upload to I/O Board component which allows you to upload any sketch (from the Code Generator or anywhere else) directly to your Arduino board from within the Grasshopper environment. A lot of stuff happens behind the scenes with this component. Essentially, it creates a dynamic Makefile and calls a shell application to convert the .pde file into a .cpp (C++) file and then into .hex code (machine readable code) to be uploaded to the microcontroller. Note: WinAVR must be installed on your machine in order to properly upload sketches to your board. You can download the latest version here.

There are also a lot of great network tools included in this release, including the UDP and OSC Listener and Transmitter components. OSC (Open Sound Control) messages are essentially specially formatted UDP messages which can be particularly handy when you want to send some sort of information across a network (either wirelessly or over a LAN). OSC messages are particularly useful because each message contains some metadata and a value, giving you more information about what type of data the message contains. These new components open up a whole new world of possibilities by allowing you to send/receive data from smart phones (iPhone or Android) or by sharing documents among friends or colleagues over a network.
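For readers curious what "metadata and a value" looks like on the wire, here is a small Python sketch that encodes and decodes the simplest kind of OSC message: an address pattern, a type tag string, and one 32-bit float. It follows the OSC 1.0 layout (null-terminated strings padded to four bytes, big-endian numbers), but it handles only single-float messages and is not how Firefly's components are written. The `/breath` address used in the usage note is a made-up example.

```python
import struct
from typing import Tuple


def _pad(raw: bytes) -> bytes:
    """Null-terminate and pad to a multiple of four bytes, as OSC requires."""
    raw += b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def encode_osc_float(address: str, value: float) -> bytes:
    """Build an OSC message carrying one float32 argument."""
    return _pad(address.encode("ascii")) + _pad(b",f") + struct.pack(">f", value)


def _read_string(data: bytes, offset: int) -> Tuple[str, int]:
    """Read a padded OSC string; return it and the offset of the next field."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    return text, (end + 4) & ~3  # skip the terminator and padding


def decode_osc_float(data: bytes) -> Tuple[str, float]:
    """Decode a single-float OSC message into (address, value)."""
    address, offset = _read_string(data, 0)
    type_tags, offset = _read_string(data, offset)
    if type_tags != ",f":
        raise ValueError(f"unsupported type tags: {type_tags!r}")
    (value,) = struct.unpack_from(">f", data, offset)
    return address, value
```

A message such as `encode_osc_float("/breath", 0.5)` comes out at 16 bytes, and those bytes are what would travel as the payload of a UDP datagram. The address tells the receiver what the number means, which is the extra information a bare UDP payload lacks.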

The video above uses the BreathOSC application (free from the iPhone app store) developed by Thomas Edwards to simulate wind effects in Grasshopper. Simply breathe into the microphone and an OSC message is sent to a specified IP address on a UDP port. I then simply use the OSC Listener to decode the message and use its value to create a wind vector to drive the Kangaroo (another 3rd party plugin for Grasshopper) wind simulation. Daniel Piker, the developer of Kangaroo, helped set up this demo... and I have to say... it's quite fun.

Another useful networking application for smart phones is TouchOSC (available for both iPhone and Android). It supports sending and receiving Open Sound Control messages over a Wi-Fi network using the UDP protocol. You can also create your own interfaces using the TouchOSC Editor and sync them directly to your phone. In this example, I've created a simple layout to control a few LEDs, a tri-color LED, and a standard servo using the new OSC Listener in Firefly. This is just a simple test, but the sky is the limit with this type of control over mobile phone interface design.

If you are interested in learning more about Firefly, check out our website at: http://www.fireflyexperiments.com/

The website has a lot of good tutorials and examples to get you up and running in no time. As always, if you have a suggestion or want to send us a comment, you can reach us at info@fireflyexperiments.com

Acknowledgements:

It is without a doubt that this release would not have been possible without the tremendous support from Prof. Panagiotis Michalatos at Harvard's GSD. His guidance over the last 6 months strongly influenced the development of the Firefly_X toolset and I owe him a great debt of gratitude for his assistance. Firefly is built upon the Grasshopper plug-in for Rhino, both developed by Robert McNeel and Associates. The Arduino language syntax is based on Wiring by Hernando Barragan. The Arduino environment is based on Processing by Ben Fry and Casey Reas, and is now supported by an amazing team of software and hardware developers that continue to refine and expand its capabilities.

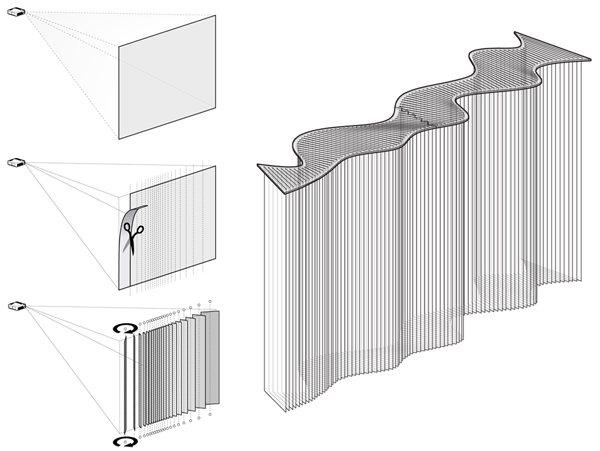

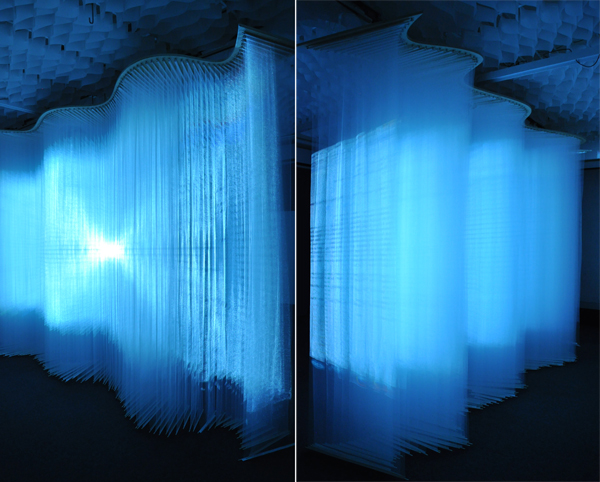

Projection One

Projection One is an interactive art installation that was recently unveiled at Harvard's Graduate School of Design. Conceived and built by Andrew Payne and fellow GSD student Eddy Man Kim, Projection One strives to challenge the way in which users perceive projection art. We started by changing the projection surface. Our idea was simple - take the traditional screen and cut it up into strips of variable widths. Then rotate those strips to create a three-dimensional surface, adding depth to create a more volumetric or spatial experience out of the projection schemes. The overall surface measured approximately 13' (length) x 9' (height) x 3' (depth).

Adding depth to a projection surface only works if the screen material is transparent enough to allow the light from the projector to pass through. We spent a good deal of time investigating various types of fabrics, and even string, but ultimately found that tulle provided just the right amount of transparency and material density. Tulle is a lightweight, net-like fabric traditionally used in wedding veils and other ornamental garments.

Acknowledgements

First, we would like to thank Professor Panagiotis Michalatos. It is without a doubt that this project would not have been realized without his tremendous help and support. All of the visualizations were developed as scripts written in C# and used several of the sawapan motion and audio libraries.

Contact Us

Like what you see or want to know more about how to bring this installation to a city near you? Contact us.

Smart Geometry - Follow Up

We're just a week removed from the Smart Geometry conference held in Copenhagen, and now that the dust has settled I wanted to post a follow up to show some of the amazing work that was accomplished in just 4 short days. Our cluster group was quite inspiring and I had a great time meeting/working with all of them. Below is a brief video recap of the four day workshop (put together by Daniel Piker and Robert Cervellione). Find out more about our cluster at the Smart Geometry website. Enjoy.

Using Grasshopper to Control a Pan/Tilt Servo

The above is a follow up video to the posting I made last week showing how we can use a Wii nunchuck to control parametric data in Grasshopper. However, in this video, we are looking at reversing the flow of information and using parametric data (numeric sliders in Grasshopper) to control two different servos. In this case, one slider controls the panning servo, while the other controls the tilting servo.

In this demo, we need to set up two different files. The first is the Grasshopper definition (written for ver. 0.6.0019) which sends the numeric slider information over the serial port via a custom VB.NET component. This component formats the two variables (the pan slider and the tilt slider) into a string that is separated by a comma. This comma will serve as our delimiter when we read the data over the serial port on the Arduino side. You can download the Grasshopper source file below.

On the Arduino side, we first have to understand that serial communication receives individual bytes of information as a stream. This can become complicated because most data types are more than 1 byte... so you have to understand the data type and then convert it on the Arduino side to the format you are looking for. In this example, we are reading a string into the buffer and then working through each individual byte until we reach our delimiter (don't forget about the comma). Once we reach the comma, we pass the data that has been read into the buffer into a variable that ultimately controls one of the servos. We do a similar process for the second set of bytes after the comma. The Arduino source code can also be downloaded below.
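The byte-by-byte logic is easier to see in a small model. The Python sketch below mimics both ends of the link: one function formats the two slider values into a comma-separated string, and a small parser consumes that string one byte at a time, the way the Arduino sees it. It is an illustration only, not the downloadable source; in particular, ending each message with a newline is an assumption made here so the parser knows where the tilt value stops.

```python
from typing import List, Optional, Tuple

DELIMITER = ","
TERMINATOR = "\n"  # assumption: each message ends with a newline


def format_pan_tilt(pan: int, tilt: int) -> bytes:
    """Sender side: pack both slider values into one delimited message."""
    return f"{pan}{DELIMITER}{tilt}{TERMINATOR}".encode("ascii")


class PanTiltParser:
    """Receiver side: accumulate bytes until a delimiter or terminator."""

    def __init__(self) -> None:
        self._digits = ""
        self._pan: Optional[int] = None

    def feed(self, byte: int) -> Optional[Tuple[int, int]]:
        """Consume one byte; return (pan, tilt) once a message is complete."""
        char = chr(byte)
        if char == DELIMITER:
            self._pan = self._take_number()  # bytes so far were the pan value
            return None
        if char == TERMINATOR:
            pan, tilt = self._pan, self._take_number()
            self._pan = None
            if pan is None or tilt is None:
                return None  # malformed message, drop it
            return pan, tilt
        self._digits += char
        return None

    def _take_number(self) -> Optional[int]:
        text, self._digits = self._digits, ""
        try:
            return int(text)
        except ValueError:
            return None


def parse_stream(data: bytes) -> List[Tuple[int, int]]:
    """Feed a whole byte stream through the parser and collect the results."""
    parser = PanTiltParser()
    results = []
    for byte in data:
        pair = parser.feed(byte)
        if pair is not None:
            results.append(pair)
    return results
```

Because the parser keeps its state between calls, it does not matter how the serial port chops the stream up: a message split across two reads still comes out whole.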

Again, I'd like to thank Robert Lee for his explanations and insights on how to set up this demo.

Source Files:

Grasshopper_controlling_PanTilt_Servo.pde (Right-click to 'Save Link As') This is the Arduino source code needed to program the micro-controller. This file was written for Arduino IDE ver. 0017.

Grasshopper to PanTilt Servo.3dm (Right-click to 'Save Link As') This is the Rhino file that accompanies the Grasshopper definition.

Update: Please use the new FireFly toolbar to facilitate the Grasshopper to Arduino connection. Click here for more information.

Note: These documents are in the public domain and are furnished "as is". The author, Andrew Payne, makes no warranty, expressed or implied, as to the usefulness of the software and documentation for any purpose. This work is licensed under a Creative Commons Attribution-Share Alike 3.0 United States License. http://creativecommons.org/licenses/by-sa/3.0/us/

Using a Wii Nunchuck to Control Grasshopper

The video above demonstrates how we can feed a stream of live sensor data (in this case, I chose to use the accelerometer data from a Wii nunchuck) into Grasshopper to control a parametric model. I'll be the first to admit that this is not a 'new' demo... In fact, this demo was heavily inspired (in concept) by this video that was created in 2008 that shows how a Wii nunchuck can control a mechanical arm in 3D Studio Max.

However, my own research was primarily concerned with understanding how to get the sensor data into Grasshopper (and how we can also reverse this flow of information to get Grasshopper to control servos, motors, LEDs, etc...) in order to create more complex and sophisticated digital and physical environments.

Before we get too far, I'd like to say thank you to Robert Lee for his insights on how to set up the VB.NET listener.

Now onto the more technical aspects. Let's start with the hardware setup. I am sending the accelerometer data from the Wii nunchuck to an Arduino Diecimila micro-controller which allows me to format the data into a block of code that the Grasshopper VB.NET listener will be able to understand. I could have spliced up my Wii controller to connect the various parts of the circuit to the appropriate pins on the Arduino board, but I decided to buy a wonderful and cheap little adapter that simplifies the entire process. You can buy your own from these online stores:

- FunGizmos.com. International shipping for $1 more.

- Tinker.it (UK)

- Little Bird Electronics (Australia)

- Sparkfun. Ships domestic & internationally. Be sure to order header pins too!

- Freduino.eu (EU)

Now that we've properly connected the Wii nunchuck to our micro-controller, we'll need to program our Arduino board so that it can read the incoming sensor data and send it over the serial port. For this, I have used a custom-built library written by Tod E. Kurt that was designed to help the communication process between a Wii controller and the Arduino. You can download the "nunchuck_funcs.h" library from his website at: http://todbot.com/blog/. You can also get the source file needed to program your own board by clicking on the links below.

On the Grasshopper side of things, we need to create a custom VB.NET listener component that can read a string of data coming in over the serial port. The nice thing about this listener is that it will display any string that is being sent from the Arduino... What you do with that string is another matter... but in this example, we are feeding a string of information that is separated by a comma ",". The comma will be our delimiter, which essentially tells us where to split the string so we can extract the integer value on the left side of the comma (which corresponds to the X-value of the accelerometer) and the integer value on the right side of the comma (which corresponds to the Y-value of the accelerometer).
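The split itself is only a couple of lines. The listener in this demo is a VB.NET component; the Python sketch below shows the same step in isolation, assuming a complete line such as `128,131` has already been read from the serial port (the sample values are made up).

```python
from typing import Tuple


def parse_accelerometer_line(line: str) -> Tuple[int, int]:
    """Split an 'x,y' string at the comma and return both integers."""
    left, separator, right = line.strip().partition(",")
    if not separator:
        raise ValueError(f"no comma delimiter in {line!r}")
    return int(left), int(right)  # (X-value, Y-value)
```

Raising an error on a line without a comma makes it easy to skip a partial read (for example, the first line after opening the port) instead of feeding garbage into the model.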

Once we have successfully read the data in Grasshopper, we can connect a Timer component to automatically refresh the solution at a given time interval. In the video above, I was able to refresh the sensor data every 50 milliseconds (the smallest time interval that is currently available in Grasshopper) without any lag or delay. You can download both the Rhino file and the Grasshopper definition needed for this demo by clicking on the links below.

Source Files:

Wii Nunchuck to Grasshopper.pde (Right-click to 'Save Link As') This is the Arduino source file. This file was written for Arduino IDE ver. 0017.

Wii Nunchuck to Grasshopper.3dm (Right-click to 'Save Link As') This is the Rhino file that accompanies the Grasshopper definition.

Update: Please use the new FireFly toolbar to facilitate the Grasshopper to Arduino connection. Click here for more information.

Note: These documents are in the public domain and are furnished "as is". The author, Andrew Payne, makes no warranty, expressed or implied, as to the usefulness of the software and documentation for any purpose. This work is licensed under a Creative Commons Attribution-Share Alike 3.0 United States License. http://creativecommons.org/licenses/by-sa/3.0/us/

Animaris Rhinoceros Mechanism

Theo Jansen, who has a background in science from the University of Delft in Holland, builds kinetic sculptures that inspire a sense of wonder at the complexity of nature. For the past 10 years, he has explored the idea of making mechanisms that walk in the wind, ultimately generating a series of "beasts" that roam the beaches living out their own lives. While there is a full series of sculptures, I found the Animaris Rhinoceros sculpture particularly interesting. I decided that I would need to create an interactive digital model of the system to understand the mechanics behind the design. The digital model uses Inverse Kinematics and Bones in 3D Studio Max to create the connections needed for the machine. Essentially, each side of the model is rigged with Inverse Kinematic solvers and then parented to an invisible "Crank" in the middle. By rotating the center crank (named Crank1) around the Y-axis, the system begins to "walk" forwards or backwards depending on the rotation of the crank. Once the initial rig is created, it can be instanced to create the full system as shown in Mr. Jansen's actual sculpture.

Download Animaris Rhinoceros.zip (3D Studio Max 9 size: 39k)

Note: This software and its documents are in the public domain and are furnished "as is". The author, Andrew Payne, makes no warranty, expressed or implied, as to the usefulness of the software and documentation for any purpose. This work is licensed under a Creative Commons Attribution-Share Alike 3.0 United States License. http://creativecommons.org/licenses/by-sa/3.0/us/

Johnny Chung Lee - Head Tracking and Interactive Displays

It's been a while since I have seen something that has truly changed the way I look at technology... But a few of the projects designed by Johnny Chung Lee, a Ph.D. graduate student at Carnegie Mellon's Human-Computer Interaction Institute, are quite remarkable in their ingenuity. While many of his projects are applicable because of their use of products that are readily available, two projects of his stand above the rest. If you haven't seen these yet, make sure to check out his demonstrations of "Head Tracking for Desktop VR Displays using the Wii Remote" and "Foldable Interactive Displays".

Head Tracking for Desktop VR Displays and other Wii Projects

Projector-Based Location Discovery and Tracking with Foldable Displays

About Johnny Chung Lee

Tensegrity Wall

After a long time coming, I finally decided to continue my research on actuated tensegrity systems. I had already created a fully rigged tensegrity module whose compression member's rotation was driven by the distance from the apex of the system to the midpoint. This distance was wired to a slider in the 3D Max file, so the user can easily drive the system with one simple control. However, to complicate matters a little bit, I decided it would be more interesting to rig these modules up into a system, or a wall type structure, where all the modules are connected, thus getting more displacement out of the design. Through a little more math and a lot more time, I was able to create a 4x4 wall system that is fully controlled by the same slider that controls the vertical movement of an actuator inserted in the middle of each module (which would ultimately control the distance from the apex to the midpoint). This system could be configured to work with a sensor so that the structure could change shape according to various environmental stimuli.

Download Tensegrity Wall.zip

Note: This software and its documents are in the public domain and are furnished "as is". The author, Andrew Payne, makes no warranty, expressed or implied, as to the usefulness of the software and documentation for any purpose. This work is licensed under a Creative Commons Attribution-Share Alike 3.0 United States License. http://creativecommons.org/licenses/by-sa/3.0/us/

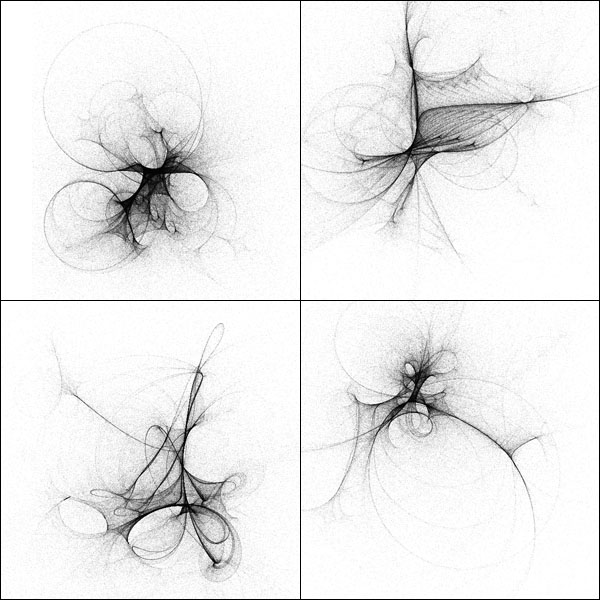

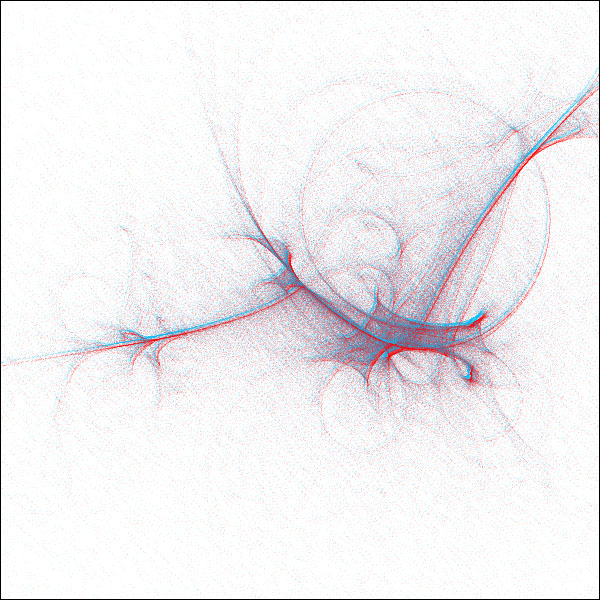

Stereoscopic Processing

According to Josh Nimoy, the Processing environment is the easiest Java compiler / interactive graphics and multimedia programming environment known to man. The system can be used to produce locally run pieces, as well as web-embeddable Java applets. After doing a little more research on the latest version of Processing, I came across the source code for a drawing applet created by Theo Calvin, who based his code on an example put forth by Jeffery Tarbell. Where I played a part in this research was taking the script and adding a few lines of code in order to create a Stereoscopic Image that would give an illusion of surface "depth" when looking at these drawings through 3D glasses. Each drawing can be generated in a matter of seconds.
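One common way to get this kind of effect is a red/cyan anaglyph: every mark is drawn twice, once in red and once in cyan, with a small horizontal offset between the two copies. The Python sketch below shows that idea only. It is not the code added to the applet (which was written in Processing), and the `depth` parameter, the `max_offset` value, and the choice of which color shifts left are assumptions made for the example.

```python
from typing import Tuple

RED = (255, 0, 0)
CYAN = (0, 255, 255)

Mark = Tuple[float, float, Tuple[int, int, int]]


def anaglyph_pair(x: float, y: float, depth: float,
                  max_offset: float = 6.0) -> Tuple[Mark, Mark]:
    """Return a red and a cyan copy of one point, separated horizontally.

    depth is clamped to [0, 1]; a larger depth gives a larger separation,
    which reads as more apparent depth through red/cyan glasses.
    """
    depth = max(0.0, min(1.0, depth))
    half = 0.5 * max_offset * depth
    return (x - half, y, RED), (x + half, y, CYAN)
```

Drawing both returned marks for every point of a drawing, instead of the single original point, is the whole change; swapping the two colors flips whether the drawing appears in front of or behind the screen.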

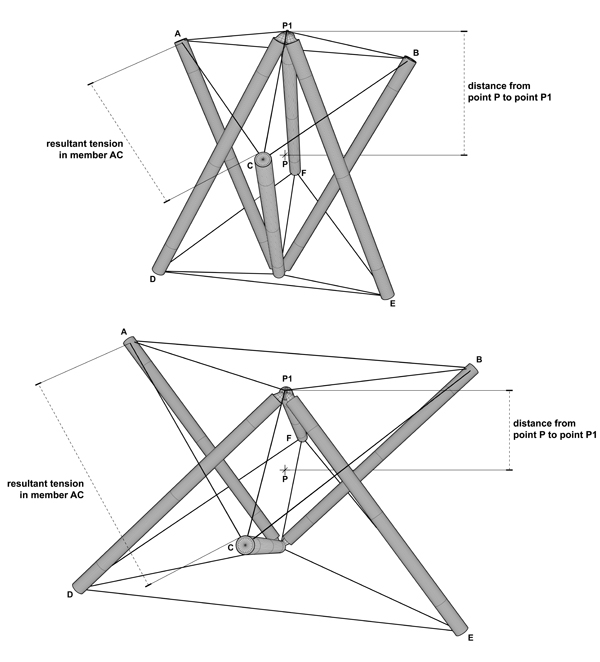

Adaptive Tensegrity

This is a digital model that I created after reading Tristan d'Estree Sterk's essay on actuated tensegrity systems. I've rigged the system to work just like his actuated tensegrity system, so that as the actuator in the middle contracts, the tension members become more rigid, thus making the entire system stronger. The actuated system is one way to create a responsive tensegrity system that can adapt to changing environmental conditions to make a "learning" structure... however, you could eliminate the actuator by making the tension members able to expand and contract, much like a muscle. I plan on pursuing this line of thought for a future skyscraper, but as yet it is still in a hypothetical stage.

Download Tensegrity Module.zip

Note: This software and its documents are in the public domain and are furnished "as is". The author, Andrew Payne, makes no warranty, expressed or implied, as to the usefulness of the software and documentation for any purpose. This work is licensed under a Creative Commons Attribution-Share Alike 3.0 United States License. http://creativecommons.org/licenses/by-sa/3.0/us/
